Time and phase coherence – live, MQA, playback, overall

This is a complex topic. Which issues directly influence or correlate with subjectively perceived sound quality, and how, has not been fully researched. However, the overall time and phase coherence shapes the final output of an audio system and has a significant influence on its sound quality.

Every stage of the chain, from live performance through recording, mixing and mastering, storage, distribution, amplification and reproduction, can affect the time and phase coherence of the sound.

- Live performance – engaging vs. sleepy sound experience in concert halls

- MQA – storing, distribution and digital – analog conversion

- Playback – reproduction of music, amplification and speaker output

- Overall – the whole system front to back as a black box

Live performance

In a concert hall, music can make you sleepy in some seats, while the same music in other seats of the same hall fully engages you and lets you feel the drama intended by the artist. Distance to the stage is not the predominant driver of this effect. This is what David Griesinger from Cambridge outlines in his papers, which have also been published by the AES.

Also see the blog posts on the ghost fundamental and on engaging sound.

Engaging or sleepy live sound

Why is that, and where does it come from?

This is not about the typical tube versus transistor, MQA versus FLAC, or even cable debates. It is about live performance in a concert hall, which either engages or does not, depending on the hall design and the seat you are listening from.

How we perceive engaging sound

“Sounds perceived as close demand attention and convey drama. Those perceived as further away can be perfectly intelligible, but can be easily ignored. The properties of sound, that lead to engagement, also convey musical clarity. One is able, albeit with some practice, to hear all the notes in a piece, and not just the harmonies.” (Griesinger)

What makes sound engaging

Engagement is associated with sonic clarity, but there is currently no standard method to quantify the acoustic properties that promote it.

It is more than intelligibility: it concerns the physics of detecting and decoding the information contained in sound waves, specifically how our ears and brain extract such precise information on pitch, timbre, horizontal localization (azimuth), and distance of multiple sound sources at the same time.

Our ear identifies tones using its harmonics

Emmanuel Deruty published some interesting papers on how our ears identify pitch. He outlines that harmonic sounds come as a set of regularly spaced pure tones. If the fundamental frequency is 100Hz, the harmonic frequencies will be 200Hz, 300Hz, 400Hz and so on. Each of those frequencies corresponds to a particular area of the tectorial membrane in our ear. Suppose a given harmonic sound comes with its fundamental frequency plus nine harmonics. It sets no fewer than 10 distinct areas of the tectorial membrane in vibration. This provides an abundance of coherent information to the brain, which has no difficulty in quickly and easily finding the right pitch. That is what makes the human ear so powerful for pitch identification.
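The harmonic series described above is simple arithmetic, and a minimal sketch may make it concrete. The function name `harmonic_series` is purely illustrative and not taken from Deruty's papers; the count includes the fundamental itself.

```python
def harmonic_series(fundamental_hz, count):
    """Return `count` regularly spaced partials: f, 2f, 3f, ...

    The first entry is the fundamental; the rest are its harmonics.
    """
    return [fundamental_hz * k for k in range(1, count + 1)]


# Fundamental of 100 Hz plus nine harmonics: ten components in total.
partials = harmonic_series(100, 10)
print(partials)
print(len(partials), "distinct frequencies")
```

Each entry in the list would correspond to one excited area in the ear, in the sense of the paragraph above.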

Ghost-fundamental; harmonics are more important

Together, the harmonics carry more information about the pitch of a sound than the fundamental frequency does. Emmanuel Deruty further explains that there can be as many as 16 audible harmonics, setting 16 zones of the tectorial membrane in motion. The fundamental tone only puts one zone in motion.

Not only the harmonics are important but also the spacing between them, which is constant and repeated up to 15 times. In fact, the fundamental matters so little for pitch that we can simply remove it. Pierre Schaeffer performed this experiment in the 1950s.
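As a purely arithmetic illustration of why the spacing carries the pitch, the sketch below removes the fundamental from a harmonic series and recovers it from the remaining partials. It assumes integer frequencies in Hz and uses the greatest common divisor as a stand-in; it is not a model of how the ear actually does this, and the function names are hypothetical.

```python
from functools import reduce
from math import gcd


def spacings(partials_hz):
    """Differences between neighbouring partials, lowest to highest."""
    ordered = sorted(partials_hz)
    return [hi - lo for lo, hi in zip(ordered, ordered[1:])]


def implied_fundamental(partials_hz):
    """Largest frequency of which every partial is a whole multiple."""
    return reduce(gcd, partials_hz)


# Harmonics of a 100 Hz tone with the fundamental itself removed.
partials = [200, 300, 400, 500]
print(spacings(partials))
print(implied_fundamental(partials))
```

The constant spacing and the implied fundamental both come out as 100 Hz, although no 100 Hz component is present in the list.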

Human neurology is acutely tuned to novelty

David Griesinger presents the discovery that the information we use to precisely identify pitch, timbre and azimuth is encoded in the phases of the upper harmonics of sounds with distinct pitches, and that this information is scrambled by reflections. Reflections at the onsets of sounds are critically important. Human neurology is acutely tuned to novelty. The onset of any perceptual event engages the mind. If the brain can detect and decode the phase information in the onset of a sound before reflections obscure it, we can determine pitch, azimuth and timbre. We then perceive the sound, although physically distant, as psychologically close and therefore engaging.

Perception of engaging versus sleepy music

The perception of engagement and its opposite, muddiness, is related to the perception of “near” and “far”, as David Griesinger explains. For obvious reasons, sounds perceived as close to us demand our attention. We tend to ignore sounds perceived as far away. Humans perceive near and far almost instantly on hearing a sound of any loudness, even if they hear it with only one ear or in a single microphone channel.

For example, if we can reliably localize the inner instruments in a string quartet, we perceive the sound as engaging. When we cannot localize them, for example the viola and second violin, we perceive the sound as muddy and not engaging.

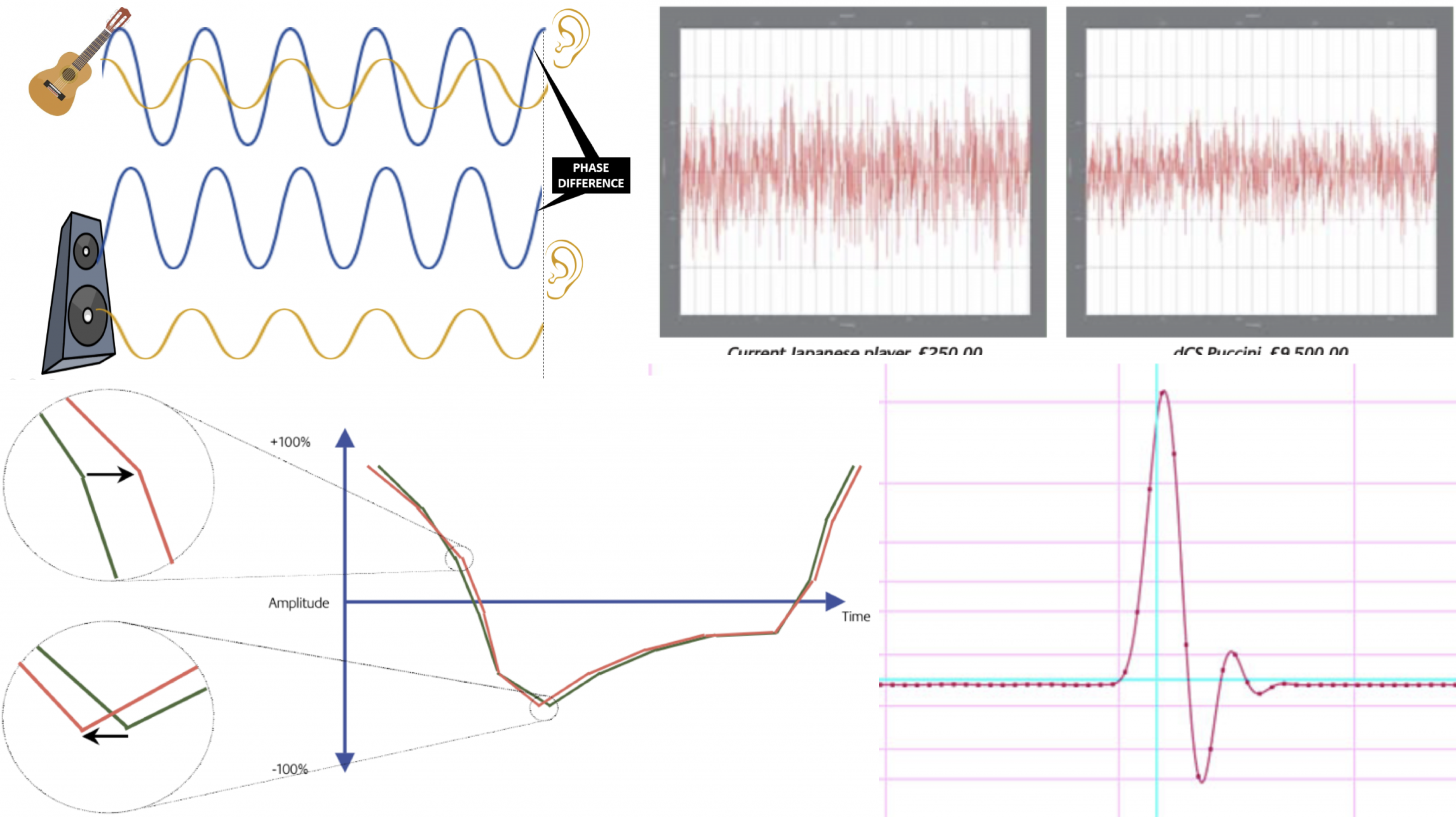

Time and phase coherence

In a live performance, very early reverberations disturb the phase coherence between a tone's harmonics and its fundamental. This leads to muddy, unengaging sound in an imperfectly designed concert hall, and it makes separating sound sources much more difficult.

Reproduced sound whose harmonics are not time- and phase-aligned with their fundamental has the same effect on our ears: the listening experience is muddier and less engaging.

MQA

Tripod Of Audio Quality

Audio quality can perhaps be defined in three interrelated ways: dynamic range, frequency bandwidth and temporal precision. Read more at

Dynamic range

Analogue electronics have long since met the dynamic range requirement, although none of the mechanical analogue recording formats could deliver 120dB. In the digital domain, dynamic range is determined by the word length, and 16 bits, …. 24 bits is more than sufficient. … Read more at
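As a rough check on the word lengths mentioned above, the theoretical dynamic range of an ideal N-bit quantizer driven by a full-scale sine wave is commonly approximated as about 6.02·N + 1.76 dB. The sketch below assumes that textbook model (no dither, no noise shaping); the function name is illustrative.

```python
import math


def ideal_dynamic_range_db(bits):
    """Textbook signal-to-quantization-noise ratio for a full-scale sine.

    Assumes an ideal uniform quantizer: 20*log10(2**bits) + 10*log10(1.5).
    """
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


for bits in (16, 24):
    print(f"{bits} bits: about {ideal_dynamic_range_db(bits):.1f} dB")
```

Under this assumption 16 bits come out at roughly 98 dB and 24 bits at roughly 146 dB, which is the sense in which 24 bits comfortably exceeds a 120dB requirement.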

Frequency bandwidth

Audio bandwidth …. of conventional digital audio systems is defined by the practicalities of the ‘brick-wall’ anti-aliasing and reconstruction filtering, which is directly related to the sample rate. While the use of 44.1 or 48 kHz sampling rates potentially compromises the audible bandwidth slightly, the double- (88.2/96kHz) and quad-rate (176.4/192kHz) formats are blameless in that respect, … Read more at

Temporal precision

Most of us think of audio equipment performance in terms of the frequency domain. Talking of equalisation we think of how the amplitude changes at different frequencies. Comparing equipment specifications we often rely on the frequency response as an arbiter of quality. But, this is only half the story, the other half being the time-domain performance. This is a much more relevant concept when considering digital systems, albeit one which is far less natural and intuitive for most of us. Read more at

This is what MQA addresses.

All digital

Nowadays, when a live performance is recorded, the signal is picked up by microphones, amplified, digitized and stored. Storing the recorded information in analog form has become quite unusual.

The most important information in any audio recording, as everyone surely agrees, is what falls within the audioband of 20Hz–20kHz. That is the range of frequencies audible to the human ear. This audioband is adequately covered by CD’s 44.1kHz sampling rate. One caveat is that squeezing a well-behaved antialiasing filter into the narrow transition band presents challenges. A sampling rate of 48kHz provides a bit more room for more gradual filtering.
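How narrow that transition band is follows directly from the Nyquist frequency, which is half the sampling rate. A minimal sketch, assuming the audioband ends at 20 kHz as stated above (the function name is illustrative):

```python
def transition_band_hz(sample_rate_hz, band_edge_hz=20_000):
    """Room between the top of the audioband and the Nyquist frequency."""
    nyquist_hz = sample_rate_hz / 2
    return nyquist_hz - band_edge_hz


for rate in (44_100, 48_000, 96_000):
    print(f"{rate} Hz sampling: {transition_band_hz(rate):.0f} Hz of transition band")
```

At 44.1kHz the antialiasing filter has only about 2 kHz in which to go from passband to full attenuation; at 48kHz it has about twice that.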

Relevant for hearing

Frequencies above 20kHz lie beyond human hearing capability. However, a fundamental tone within our hearing range may have harmonics above 20kHz, and the human hearing system might still register those harmonics and our brain might process them. To restrict the recorded signal to the 20Hz–20kHz band for digital sampling, frequency filters are used. Those filters influence the time and phase coherence.

Analog in to analog out

MQA is, as Bob Stuart likes to say, an end-to-end technology: analog in to analog out.

Read more at

With this end-to-end approach, MQA tries to prevent those time and phase coherence issues. Whether MQA performs well, or whether other solutions do better, is not judged here.

Time and phase coherence can already be affected at the very beginning of the music reproduction chain. Any such influence is carried through to the final output.

Playback

Reproduction of recorded music, in a form we can listen to, always ends up being analog. Anything that has been stored and transmitted digitally therefore has to be converted back into an analog signal. That signal is usually amplified and radiated by speakers into the listening environment. The loudspeakers are the last part of the chain.

Crossover

Most hifi loudspeakers use different drivers for high and low frequencies. A crossover network hands over smoothly from the low-frequency unit to the mid-frequency unit and on to the tweeter.

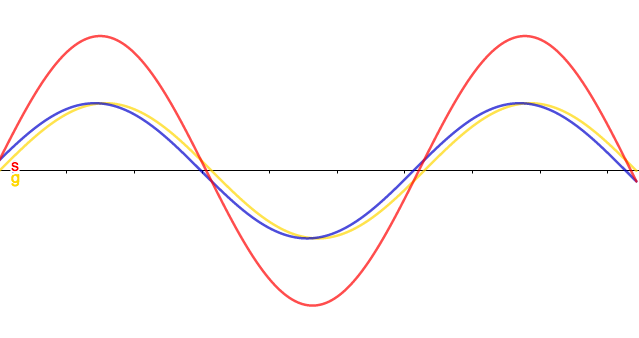

Two waves of the same frequency and amplitude add up depending on their relative phase. Perfectly in phase, they sum to twice the amplitude, or +6 dB. With a 180 degree phase shift they cancel each other out.

A phase shift of 90 degrees leads to a summed signal of +3 dB.
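These figures follow from adding two sinusoids of equal amplitude: the sum has amplitude 2·|cos(φ/2)| relative to one wave alone, where φ is the phase difference. A minimal sketch, assuming equal amplitudes and identical frequency (the function name is illustrative):

```python
import math


def summed_level_db(phase_deg):
    """Level of two equal sine waves summed, relative to one of them."""
    amplitude = abs(2 * math.cos(math.radians(phase_deg) / 2))
    if amplitude < 1e-12:
        return float("-inf")  # complete cancellation
    return 20 * math.log10(amplitude)


for phase in (0, 90, 180):
    print(f"{phase:3d} degrees: {summed_level_db(phase):.1f} dB")
```

The three cases reproduce the values in the text: about +6 dB in phase, about +3 dB at 90 degrees, and full cancellation at 180 degrees.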

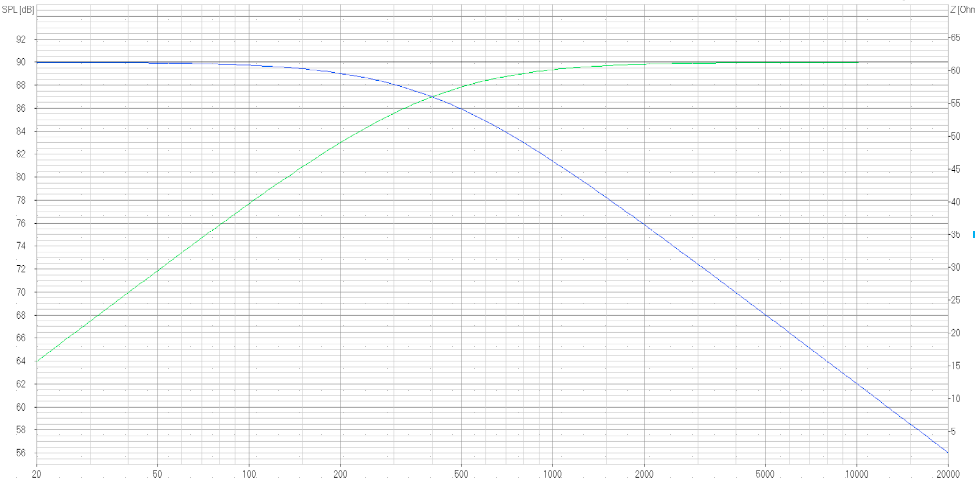

Crossing over from a mid-frequency driver to a high-frequency driver requires filtering: the higher frequencies are removed from the mid-frequency driver's signal and, vice versa, the lower frequencies from the high-frequency driver's signal. Such a filter cannot switch on and off abruptly; instead it reduces the amplitude gradually for frequencies above or below the crossover frequency. The order of the filter defines how gradually: it sets the roll-off in dB per octave, 6dB/octave for first order, 12dB/octave for second order, and so on.
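The relation between filter order and roll-off can be checked numerically. The sketch below assumes an ideal Butterworth low-pass response, which the text does not specify; other filter alignments differ near the crossover frequency but approach the same slope well beyond it. Function names are illustrative.

```python
import math


def lowpass_attenuation_db(freq_ratio, order):
    """Attenuation of an ideal Butterworth low-pass at f / f_crossover."""
    return 10 * math.log10(1 + freq_ratio ** (2 * order))


def slope_db_per_octave(order, freq_ratio=16.0):
    """Extra attenuation over one octave, measured well above crossover."""
    return (lowpass_attenuation_db(2 * freq_ratio, order)
            - lowpass_attenuation_db(freq_ratio, order))


for order in (1, 2, 4):
    print(f"order {order}: about {slope_db_per_octave(order):.1f} dB/octave")
```

Far enough above the crossover frequency the slope settles at roughly 6 dB per octave per filter order, while at the crossover frequency itself this response is about 3 dB down regardless of order.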

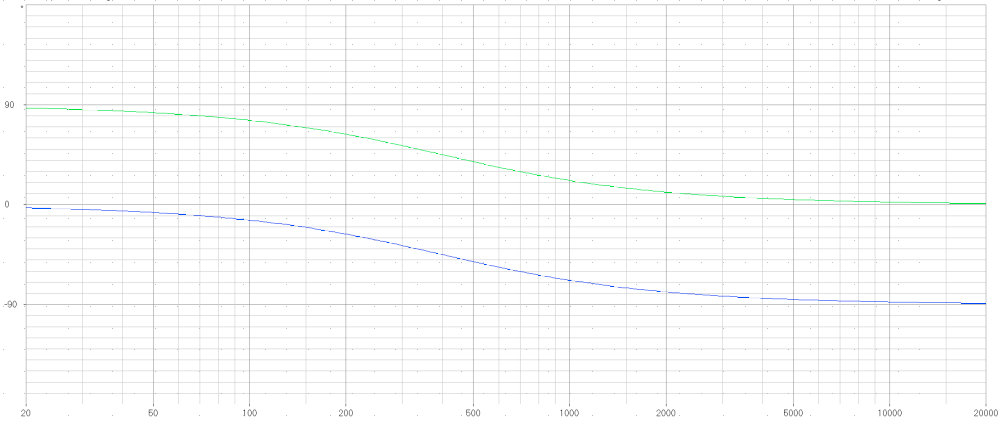

SPL 1st order

Phase impact

Each filter also changes the phase of the signal. The slopes of the crossover determine the phase response of the loudspeaker at the crossover frequency: steeper slopes introduce larger phase changes. In addition, the positioning and polarity of the drivers influence the time coherence between them. The phase difference between the drivers at the crossover frequency is 90 degrees for a first-order crossover, 180 degrees for second order, 360 degrees for fourth order, and so on. Shifting the phase also shifts the signal in time.
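For the first-order case, the 90 degree figure can be reproduced with complex arithmetic. The sketch below assumes ideal first-order low-pass and high-pass sections with the same corner frequency and ignores driver position and polarity; the function names are illustrative.

```python
import cmath
import math


def first_order_lowpass(freq_ratio):
    """Transfer function 1 / (1 + j f/fc) evaluated at f/fc = freq_ratio."""
    return 1 / (1 + 1j * freq_ratio)


def first_order_highpass(freq_ratio):
    """Transfer function (j f/fc) / (1 + j f/fc) at f/fc = freq_ratio."""
    return (1j * freq_ratio) / (1 + 1j * freq_ratio)


def phase_deg(response):
    return math.degrees(cmath.phase(response))


# Evaluate both branches exactly at the crossover frequency.
low = first_order_lowpass(1.0)
high = first_order_highpass(1.0)
print(f"woofer branch:  {phase_deg(low):+.1f} degrees")
print(f"tweeter branch: {phase_deg(high):+.1f} degrees")
print(f"difference:     {phase_deg(high) - phase_deg(low):.1f} degrees")
print(f"summed magnitude: {abs(low + high):.3f}")
```

The low-pass branch lags by 45 degrees and the high-pass branch leads by 45 degrees, giving the 90 degree difference, while the two idealized branches still sum to unity magnitude.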

1st order phase

Time impact

At the crossover frequency, LF is radiated with a phase delay of 90 degrees behind the tweeter for 1st order filters. At 400 Hz that is a delay of one-quarter cycle, or 1/(4*400) s = 0.625 ms. Furthermore, a 2nd order filter introduces a 180 degree LF phase lag compared to the tweeter output, so the tweeter leads a 400 Hz pulse by half a cycle, or 1.25 ms.

More on this in David's blogs: loudspeaker phase response; phase and time 1; phase and time 2; phase and time 3.
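The conversion used here is delay = (phase / 360) / frequency. A minimal sketch of that arithmetic, with an illustrative function name:

```python
def phase_to_delay_ms(phase_deg, freq_hz):
    """Time offset in milliseconds for a phase shift at one frequency."""
    cycles = phase_deg / 360.0
    return cycles / freq_hz * 1000.0


for phase in (90, 180):
    print(f"{phase} degrees at 400 Hz: {phase_to_delay_ms(phase, 400):.3f} ms")
```

Note that the same phase shift corresponds to a shorter delay at a higher crossover frequency, so the numbers above apply only to the 400 Hz example.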

Overall

The sound system as a black box

Treat the whole audio playback system as a black box. A recording of any kind goes in, and an output that we can listen to comes out.

The input to such a black box, that is, a complete audio system, is obviously a music source. We will not differentiate by type of source, as it is irrelevant for this discussion, except that we exclude purely analog systems, in which no component of the chain, including the source, is digital.

Comparing the input signal with the output signal

Where the output is picked up significantly influences how much it differs from the input. Picked up at the listening position, the output is drastically influenced by the room ambience. In an anechoic chamber the output might be free of ambience influence, but it still contains the significant influence of the speaker with its design, crossovers and multiple drivers. The comparison with the input signal is much easier if it is made on the electrical signal before the speakers.

Between the input signal and the speakers there are still many steps and components involved. All of these can influence the time and phase coherence.

Significant timing variations

A new approach to audio measurement by Nordost has delivered surprising results on timing variations between input and output signals.

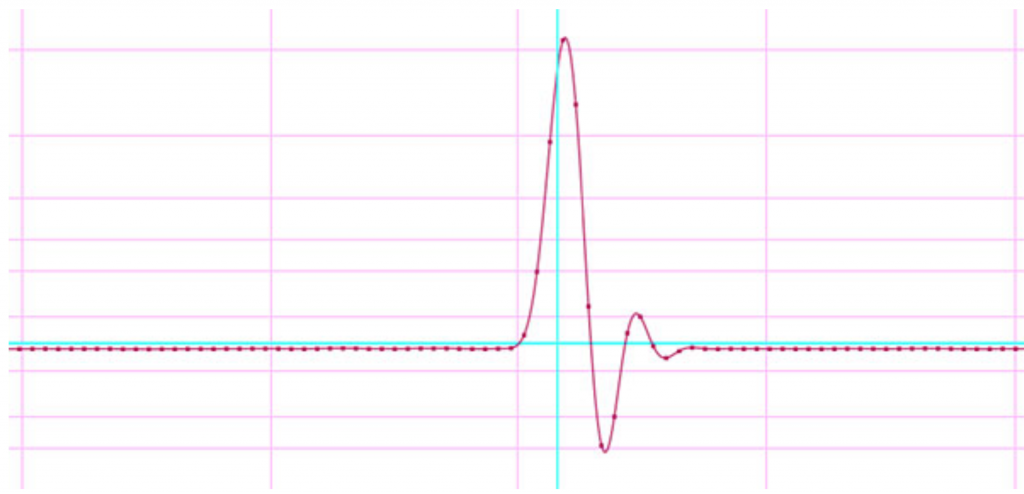

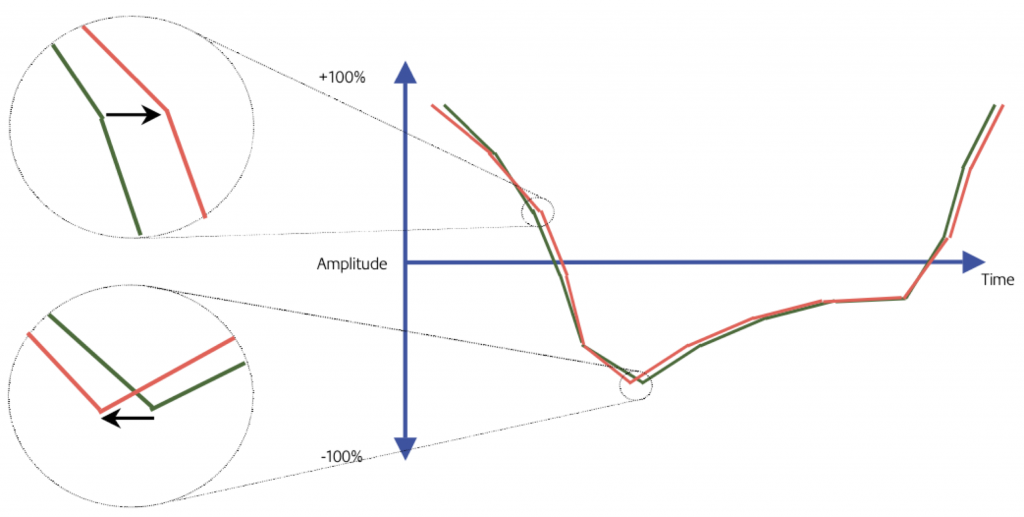

Comparing a CD input signal with a CD player's output signal on a sample-by-sample basis, with the group delay taken out, they found situations like this (green: input; red: output).

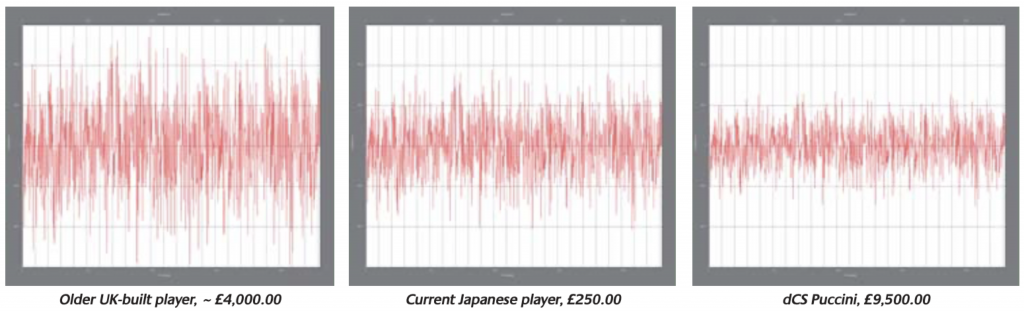

“Running a second algorithm allows Acuity to measure those errors (plus and minus) against time, producing a readout that looks like this:

Here, each red line represents an error, the longer it is, the greater that error. If the player’s output matched the input signal, the result would be a single horizontal line. As you can see, in the case of the test player, the deviation is significant. How significant? Well, the vertical graduations on the plot are 10 microseconds each, and as you can see, there are plenty of examples in which peak-to-peak errors of over 40 microseconds (or two complete sample periods) occur!”
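To put the quoted figures in context, a timing error can be expressed in sample periods. The sketch below assumes the CD sampling rate of 44.1 kHz; it only converts units and does not reproduce Nordost's measurement, and the function names are hypothetical.

```python
def sample_period_us(sample_rate_hz=44_100):
    """Duration of one sample in microseconds."""
    return 1e6 / sample_rate_hz


def error_in_sample_periods(error_us, sample_rate_hz=44_100):
    """Express a timing error as a multiple of the sample period."""
    return error_us / sample_period_us(sample_rate_hz)


print(f"one sample period: {sample_period_us():.2f} microseconds")
print(f"40 microseconds = {error_in_sample_periods(40):.2f} sample periods")
```

Under this assumption one sample period lasts a little under 23 microseconds, so a 40 microsecond error is somewhat less than two full periods and errors above roughly 45 microseconds exceed two.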

They compared several CD players. Their subjective listening experience matched the measured results.