Why aren’t time and phase prominent topics in audio?

Stereo channel time and phase coherence

Sound mixers and sound engineers deliberately design the sound with in-phase or out-of-phase channels. A more mono-compatible sound, i.e. one whose channels are in phase, sums up in level: the amplitudes of the same frequencies in both channels add up, i.e. they double. This delivers a narrower sound stage and stereo image, but more punch and clarity. Channels that are more out of phase, by contrast, deliver a wider stereo image and a roomier, more spacious sound stage.
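As a sketch of the summing described above, assume two identical sine waves (a hypothetical 100Hz tone, amplitude 1.0, sampled at 48kHz) where only the phase of the right channel is changed. The mono sum L+R can be computed sample by sample and compared with the closed form 2A·|cos(φ/2)|:

```python
import math

def mono_sum_peak(phase_deg, amplitude=1.0, freq_hz=100.0, fs_hz=48000, duration_s=0.05):
    """Peak of L+R when R is the same sine as L, shifted by phase_deg (illustrative values)."""
    phi = math.radians(phase_deg)
    peak = 0.0
    for n in range(int(fs_hz * duration_s)):
        t = n / fs_hz
        left = amplitude * math.sin(2 * math.pi * freq_hz * t)
        right = amplitude * math.sin(2 * math.pi * freq_hz * t + phi)
        peak = max(peak, abs(left + right))
    return peak

def mono_sum_amplitude(phase_deg, amplitude=1.0):
    """Closed form: A*sin(x) + A*sin(x + phi) = 2A*cos(phi/2)*sin(x + phi/2)."""
    return 2 * amplitude * abs(math.cos(math.radians(phase_deg) / 2))

for deg in (0, 90, 180):
    print(deg, round(mono_sum_peak(deg), 3), round(mono_sum_amplitude(deg), 3))
```

In phase, the sum reaches 2.0, i.e. double the amplitude (about +6dB); at 90° it drops to about 1.41; at 180° the two channels cancel. A real mix contains many frequencies with different phase relationships, so this pure-tone case is only an illustration.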

Time and phase coherence across the frequency range

Time and phase are highly relevant as well when we are not comparing two stereo channels but looking at a mono signal. Mixing a bass drum together with a bass requires accurate time and phase handling to improve punch and prevent smearing or muddiness. Every stage of the audio signal chain affects time and phase. Two frequencies such as 60Hz and 300Hz might end up with a time difference of several hundred milliseconds, even though the bass created both frequencies (fundamental and harmonic) at the same time. Take a three-way speaker: the woofer, the midrange and the tweeter are often neither in phase nor aligned in time. Woofers often start much later than the midrange and the tweeter.
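The link between time and phase can be made concrete with a little arithmetic: a pure time delay Δt shifts a frequency f by 360·f·Δt degrees, so the same delay means a different phase shift at every frequency. A minimal sketch, using a hypothetical 1ms delay and the 60Hz and 300Hz example from above:

```python
def delay_to_phase_deg(delay_s, freq_hz):
    """Phase shift in degrees that a pure time delay produces at freq_hz."""
    return 360.0 * freq_hz * delay_s

def phase_to_delay_ms(phase_deg, freq_hz):
    """Time delay in milliseconds that corresponds to a phase shift at freq_hz."""
    return phase_deg / (360.0 * freq_hz) * 1000.0

# A hypothetical 1 ms delay applied to the fundamental and to its 5th harmonic.
print(delay_to_phase_deg(0.001, 60))    # about 21.6 degrees
print(delay_to_phase_deg(0.001, 300))   # about 108 degrees
# Half a period (180 degrees) at 60 Hz already corresponds to about 8.33 ms.
print(phase_to_delay_ms(180, 60))
```

The converse also holds: a phase shift that is the same at all frequencies is not a constant delay, so fundamentals and harmonics drift apart in time.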

How we hear

What we call ‘sound’ is in fact a progressive acoustic wave — a series of variations in air pressure, spreading out from whatever source made the sound. When these pressure variations strike the ear, they find their way through the external auditory canal to the tympanic membrane, setting it into vibration. The signal is thus converted to mechanical vibrations in solid matter. These vibrations of the tympanic membrane are transmitted to the ossicles, which in turn transmit them to the cochlea. Here the signal undergoes a second change of nature, being converted into pressure variations within liquid. These are then transformed again by specialised hair cells, which convert the liquid waves into nervous signals. [SOS]

There is a very interesting article on human hearing capabilities: “High-resolution frequency tuning but not temporal coding in the human cochlea”.

It addresses a side topic, but gives some very interesting insights into how important time and phase are, especially up to 1300Hz:

The cochlea decomposes sound into bands of frequencies and encodes the temporal waveform in these bands, generating frequency tuning and phase-locking in the auditory nerve (AN).

We obtained the first electrophysiological recordings, to our knowledge, of cochlear potentials, which address both frequency tuning and temporal coding in humans with normal hearing.

The situation is somewhat different for coding of fine-structure in humans, for which the discussion has been entirely based on behavioral research, and no attempt has been made to obtain direct measurements in humans. It is undisputed that coding of sound fine-structure is a prerequisite for binaural temporal sensitivity at low frequencies, with an abrupt upper limit at approximately 1.3 kHz. Such coding has been proposed to be important for other auditory attributes as well, at frequencies as high as 10 kHz or more, but this is debated.

How we identify pitch

Here again the Sound on Sound article “How The Ear Works”, which I have referenced several times:

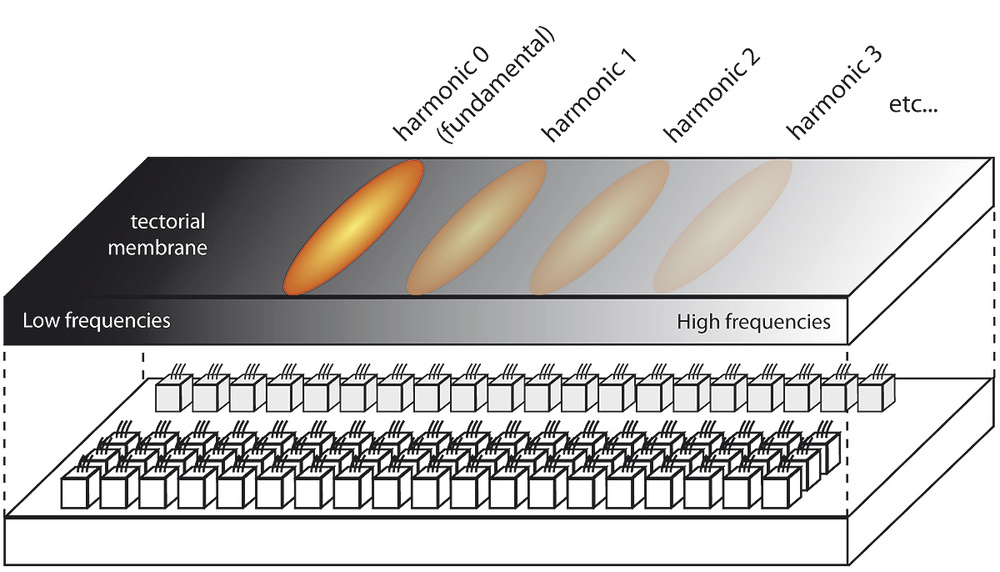

Cochlea

Harmonic sounds come as a set of regularly spaced pure tones: if the fundamental frequency is 100Hz, the harmonic frequencies will be 200Hz, 300Hz, 400Hz and so on. As shown in Figure 5, each one of those frequencies will correspond to a particular area of the tectorial membrane. Suppose a given harmonic sound comes with its fundamental frequency plus nine harmonics. In this case, no fewer than 10 distinct areas of the tectorial membrane will be set in vibration: this provides an abundance of coherent information to the brain, which will have no difficulty in quickly and easily finding the right pitch. This is what makes the human ear so powerful for pitch identification.
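To make “regularly spaced pure tones” concrete, here is a sketch, assuming integer frequencies in Hz, that builds the fundamental plus nine harmonics from the quote and recovers their common spacing with a greatest-common-divisor calculation. It is a purely arithmetic illustration of the regular spacing, not a model of what the cochlea or the brain actually computes.

```python
from functools import reduce
from math import gcd

def harmonic_series(f0_hz, count):
    """Fundamental plus (count - 1) harmonics at integer multiples of f0_hz."""
    return [f0_hz * k for k in range(1, count + 1)]

def common_fundamental(partials_hz):
    """Greatest common divisor of integer partial frequencies, i.e. their common spacing."""
    return reduce(gcd, partials_hz)

partials = harmonic_series(100, 10)   # fundamental plus nine harmonics, as in the quote
print(partials)                       # [100, 200, 300, 400, 500, 600, 700, 800, 900, 1000]
print(common_fundamental(partials))   # 100
# The spacing still points to 100 Hz if only some of the harmonics are present.
print(common_fundamental([200, 300, 400]))
```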

MQA – lossless – high temporal resolution

MQA is a fairly widely distributed format that claims to solve the temporal issue comfortably.

A very in-depth article on MQA can be found here, in which Bob Stuart discusses Stephan Hotto’s (Xivero) published “Hypothesis Paper” on MQA:

There are three main claims of MQA we would like to look at:

1.) MQA achieves a high temporal resolution because of the filters and sampling techniques used.

2.) MQA losslessly and transparently compresses a 192kHz / 24-bit recording into a 48kHz / 24-bit baseband.

3.) MQA claims to improve the sound quality of the original recording.
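To put claim 2 into numbers, the sketch below computes raw (uncompressed) PCM data rates, assuming a stereo recording. It only shows the size of the gap the claim implies; it says nothing about how MQA’s encoding actually closes it.

```python
def pcm_bitrate_mbps(sample_rate_hz, bit_depth, channels=2):
    """Raw PCM data rate in Mbit/s (stereo assumed by default)."""
    return sample_rate_hz * bit_depth * channels / 1e6

hi_res = pcm_bitrate_mbps(192_000, 24)    # 9.216 Mbit/s
baseband = pcm_bitrate_mbps(48_000, 24)   # 2.304 Mbit/s
ratio = (192_000 * 24) / (48_000 * 24)    # 4.0, independent of channel count
print(hi_res, baseband, ratio)
```

A 192kHz / 24-bit stream carries four times the raw data of a 48kHz / 24-bit stream, which is why a lossless fit into the smaller baseband is a strong claim.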

Again, I do not want to question the abilities of MQA. However, I do want to highlight that MQA claims to solve temporal issues. Formats such as 44.1kHz / 16-bit or 48kHz / 24-bit require digital filtering (anti-aliasing and reconstruction filters). Those filters do influence time and phase. If that were not important, why would we need solutions like MQA?
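To illustrate how such a filter acts on time, here is a minimal sketch of a linear-phase FIR low-pass designed as a Hann-windowed sinc. The values (101 taps, 20kHz cutoff, 44.1kHz sampling) are hypothetical and chosen for illustration only; real converters use longer and differently designed filters. A symmetric impulse response delays every frequency by the same (N-1)/2 samples, but spreads the response over time, with ringing both before and after the main peak.

```python
import math

def windowed_sinc_lowpass(num_taps, cutoff_hz, fs_hz):
    """Linear-phase FIR low-pass: ideal sinc impulse response times a Hann window."""
    if num_taps % 2 == 0:
        raise ValueError("use an odd number of taps so the peak sits on a sample")
    fc = cutoff_hz / fs_hz              # normalised cutoff in cycles per sample
    centre = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        x = n - centre
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    gain = sum(taps)
    return [t / gain for t in taps]     # normalise to unity gain at 0 Hz

def latency_ms(num_taps, fs_hz):
    """Constant group delay of a symmetric FIR: (N - 1) / 2 samples, in ms."""
    return (num_taps - 1) / 2 / fs_hz * 1000

taps = windowed_sinc_lowpass(101, 20_000, 44_100)
peak_index = taps.index(max(taps))
print(peak_index)                       # 50, the centre of the filter
print(latency_ms(101, 44_100))          # about 1.134 ms
# Non-zero taps before the peak: the filter "rings" before the main impulse.
print(any(abs(t) > 1e-6 for t in taps[:peak_index]))
```

A minimum-phase design would move all of the ringing after the peak, at the price of a delay that is no longer equal for all frequencies.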

Analog is not a free ticket

Analog systems consist of many electronic components, and some of them raise interesting questions. A class AB push-pull tube amplifier splits the work between two sides: one side amplifies the positive half of the signal, the other side the negative half. The output stage then combines both halves into one signal again. However, this combined signal rather often contains time and phase issues.

Any analog audio circuit contains resistors and, in most cases, capacitors and coils as well. In combination, these purely analog components form filters. RC low-pass filters and LC high-pass filters influence the time and phase of the signal. This happens in amplifiers, but also in loudspeaker crossovers.
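As an example of how a single RC low-pass shifts time and phase, the sketch below evaluates the first-order transfer function H = 1/(1 + jωRC). The component values (R = 1kΩ, C = 100nF) are hypothetical. The phase is -atan(ωRC) and the group delay, i.e. how long each frequency is held back, is RC/(1 + (ωRC)²), so low frequencies are delayed more than frequencies above the cutoff.

```python
import math

def rc_cutoff_hz(r_ohm, c_farad):
    """-3 dB cutoff of a first-order RC low-pass."""
    return 1 / (2 * math.pi * r_ohm * c_farad)

def rc_lowpass_response(freq_hz, r_ohm, c_farad):
    """Phase (degrees) and group delay (seconds) of H = 1 / (1 + j*w*R*C)."""
    tau = r_ohm * c_farad
    w = 2 * math.pi * freq_hz
    phase_deg = -math.degrees(math.atan(w * tau))
    group_delay_s = tau / (1 + (w * tau) ** 2)
    return phase_deg, group_delay_s

R, C = 1_000, 100e-9                   # hypothetical values: 1 kOhm, 100 nF
fc = rc_cutoff_hz(R, C)                # about 1591.5 Hz
for f in (100, fc, 10_000):
    phase, delay = rc_lowpass_response(f, R, C)
    print(f"{f:8.1f} Hz  phase {phase:6.1f} deg  group delay {delay * 1e6:6.1f} us")
```

At the cutoff frequency the phase shift is exactly -45° and the group delay has dropped to half of its low-frequency value RC.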

Time and phase influence

Variations in time and phase can change the distance between the fundamental tone and its related harmonics. Given how we identify pitch, any such time and phase influence might significantly alter our pitch identification. As mentioned in my last blog post, David Griesinger explains that pitch is most relevant for the localization of tones.
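A small sketch of what shifting a harmonic against its fundamental does to the waveform: both signals below contain exactly the same two components at the same amplitudes (a hypothetical 100Hz fundamental and its 200Hz harmonic at half amplitude); only the harmonic is delayed by 1.25ms, which is 90° at 200Hz. The peak of the waveform changes from about 1.30 to 1.50 although the level of each component is unchanged. Whether and how this alters perceived pitch is the argument of this post; the code only shows the change in time structure.

```python
import math

def peak_with_delayed_harmonic(f0_hz, harmonic_number, harmonic_amp, delay_s,
                               fs_hz=48000, duration_s=0.1):
    """Peak of sin(f0) + harmonic_amp * sin(k * f0) with the harmonic delayed by delay_s."""
    fk = harmonic_number * f0_hz
    peak = 0.0
    for n in range(int(fs_hz * duration_s)):
        t = n / fs_hz
        y = (math.sin(2 * math.pi * f0_hz * t)
             + harmonic_amp * math.sin(2 * math.pi * fk * (t - delay_s)))
        peak = max(peak, abs(y))
    return peak

aligned = peak_with_delayed_harmonic(100, 2, 0.5, 0.0)       # about 1.299
shifted = peak_with_delayed_harmonic(100, 2, 0.5, 0.00125)   # about 1.5
print(round(aligned, 3), round(shifted, 3))
```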

Might not be that important – really?

The relationship between fundamentals and their harmonics in terms of distance (time) and phase seems to influence sound quality significantly. It is about the width of the virtual sound stage. It is about muddiness versus clarity and punch. It is about engaging versus boring music. Isn’t that what music is all about?

Why is this topic not all over the place? Why is time coherence not key information on every spec sheet?